The post a week ago Monday announced the release of the Heat resource plugin for VMware OpenStack and VMware Big Data Extensions. As I worked through the final issues before releasing the plugin and writing that initial announcement, I realized it might be helpful to describe how the OpenStack Heat resource plugin works. The initial code for the plugin was rather simplistic and based closely on the Python scripts I wrote to test the Big Data Extensions REST API. Once testing of the resource plugin began, it became evident that far more code would be necessary for it to succeed. This blog post describes the workflows inside the resource plugin, the challenges I encountered, and where improvements could be made in the future. My ultimate hope for the resource plugin is for VMware to take the framework and develop a supported OpenStack Heat resource plugin for everyone to consume.

Basic Workflow

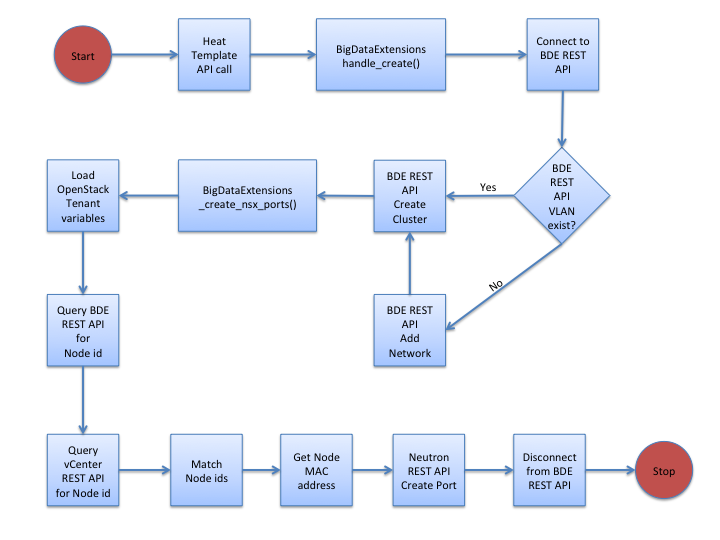

The basic workflow for the resource plugin is not as simple as I had hoped. Due to the integration of VMware OpenStack with VMware NSX-v, several additional API calls were required before the Big Data Extensions instances would be accepted into the fold and allowed to communicate over the network.

You can see from the figure above the number of API calls required to create a new cluster and allow it to communicate with the Big Data Extensions management server.

Properties

The resource plugin requires several variables in order to operate properly. These variables can then be specified within the JSON Heat template. The following variables are set up:

    properties_schema = {
        BDE_ENDPOINT: properties.Schema(
            properties.Schema.STRING,
            required=True,
            default='bde.localdomain'
        ),
        VCM_SERVER: properties.Schema(
            properties.Schema.STRING,
            required=True,
            default='vcenter.localdomain'
        ),
        USERNAME: properties.Schema(
            properties.Schema.STRING,
            required=True,
            default='[email protected]'
        ),
        PASSWORD: properties.Schema(
            properties.Schema.STRING,
            required=True,
            default='password'
        ),
        CLUSTER_NAME: properties.Schema(
            properties.Schema.STRING,
            required=True
        ),
        CLUSTER_TYPE: properties.Schema(
            properties.Schema.STRING,
            required=True
        ),
        NETWORK: properties.Schema(
            properties.Schema.STRING,
            required=True
        ),
        CLUSTER_PASSWORD: properties.Schema(
            properties.Schema.STRING,
            required=False
        ),
        CLUSTER_RP: properties.Schema(
            properties.Schema.STRING,
            required=True,
            default='openstackRP'
        ),
        VIO_CONFIG: properties.Schema(
            properties.Schema.STRING,
            required=True,
            default='/usr/local/bin/etc/vio.config'
        ),
        BDE_CONFIG: properties.Schema(
            properties.Schema.STRING,
            required=False,
            default='/usr/local/bin/etc/bde.config'
        ),
        SECURITY_GROUP: properties.Schema(
            properties.Schema.STRING,
            required=False,
            default='9d3ecec8-e0e3-4088-8c71-8c35cd67dd8b'
        ),
        SUBNET: properties.Schema(
            properties.Schema.STRING,
            required=True
        )
    }
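To give a feel for how these properties end up in a template, here is a minimal sketch that builds a JSON Heat template from Python. The resource type string is borrowed from the plugin's log prefix, and the lowercase property keys (`cluster_name`, `cluster_type`, `network`, `subnet`) and the `hadoop` cluster type are assumptions for illustration; the real names are whatever the plugin source maps the constants above to.

```python
import json

# Assumed resource type and property keys: check the plugin source for the
# actual strings behind CLUSTER_NAME, CLUSTER_TYPE, NETWORK and SUBNET.
RESOURCE_TYPE = "VirtualElephant::VMware::BDE"


def build_template(cluster_name, cluster_type, network_id, subnet_id):
    """Return a minimal Heat template (as a dict) describing one BDE cluster."""
    return {
        "heat_template_version": "2013-05-23",
        "description": "Sketch of a Big Data Extensions cluster resource",
        "resources": {
            "bde_cluster": {
                "type": RESOURCE_TYPE,
                "properties": {
                    # Only the required properties without defaults are set.
                    "cluster_name": cluster_name,
                    "cluster_type": cluster_type,
                    "network": network_id,
                    "subnet": subnet_id,
                },
            }
        },
    }


template = build_template("hadoop01", "hadoop", "<network-uuid>", "<subnet-uuid>")
print(json.dumps(template, indent=2))
```

Properties that carry a default in the schema (the BDE endpoint, vCenter server, credentials and so on) can be left out of the template and only overridden when the environment differs.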

NSX-v Integration

The integration with NSX-v was a multi-step process and honestly took me nearly a full week of testing to sort out. I simply could not have worked through all of these steps were it not for the awesome VIO and BDE engineering teams at VMware who offered guidance, suggestions and screenshots on where NSX-v was hooked into the instances (or needed to be). I am hoping to see them at VMworld this year so that I can buy them dinner for all the help they provided.

Before we go any further, let me state that writing code in Python is not yet a strength for me. In fact, this project was really my first significant foray into Python, so forgive me if the code is horrid looking.

Identifying the vDS Portgroup

The first piece was identifying the vDS Portgroup behind the network UUID and having the resource plugin make that Portgroup available to Big Data Extensions.

    289 # determine actual NSX portgroup created
    290 # hack - regex in Python is not a strength
    291 mob_string = '/mob/?moid=datacenter-2'
    292 curl_cmd = 'curl -k -u ' + bde_user + ':' + bde_pass + ' ' + prefix + vcm_server + mob_string
    293 grep_cmd = " | grep -oP '(?<=\(vxw).*(?=" + network + "\))' | grep -oE '[^\(]+$'"
    294 awk_cmd = " | awk '{print $0 \"" + network + "\"}'"
    295 full_cmd = curl_cmd + grep_cmd + awk_cmd
    296
    297 p = subprocess.Popen(full_cmd, stdout=subprocess.PIPE, shell=True)
    298 (net_uid, err) = p.communicate()
    299
    300 # Check to see if network_id is as we expect it
    301 if 'vxw' in net_uid:
    302     network_id = net_uid
    303 else:
    304     network_id = "vxw" + net_uid
    305
    306 network_id = network_id.rstrip('\n')
    307
    308 # Authenticate in a requests.session to the BDE server
    309 curr = self._open_connection()
    310
    311 # Should check to see if network already exists as available network
    312 # This logs a big fat error message in /opt/serengeti/logs/serengeti.log
    313 # when the network doesn't exist.
    314 header = {'content-type': 'application/json'}
    315 api_call = '/serengeti/api/network/' + network
    316 url = prefix + bde_server + port + api_call
    317 r = curr.get(url, headers=header, verify=False)
    318
    319 # Add new network to BDE as an available network if check fails
    320 payload = {"name" : network, "portGroup" : network_id, "isDhcp" : "true"}
    321 api_call = '/serengeti/api/networks'
    322 url = prefix + bde_server + port + api_call
    323 r = curr.post(url, data=json.dumps(payload), headers=header, verify=False)
    324 logger.info(_("VirtualElephant::VMware::BDE - Network creation status code %s") % r.status_code)

As the code shows, determining the actual name of the vDS Portgroup required taking the UUID, which was passed into the resource plugin as a reference from the JSON file, and parsing HTML output from a call to the MOB. Depending on how many Portgroups existed within the vCenter, it also meant dealing with additional HTML code that hid the complete list of Portgroups from view unless a user clicked a hyperlink. There is probably a better way of grep'ing and awk'ing in Python, but old habits from decades of writing Perl code are shining through there. You may also notice that the MOB link is hard-coded (line 291). This has to change soon, because it is not a sustainable method for using the resource plugin in an enterprise environment.
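For what it is worth, the grep and awk steps could probably be folded into a single regular expression with Python's `re` module, and the BDE network could be added only when the lookup fails. The sketch below is one way to do that and is not what the plugin ships. The helper names `find_portgroup` and `ensure_bde_network` are made up for illustration, the sample Portgroup name format is an assumption, and treating any non-200 response from the lookup as "network not registered" is a guess about how the BDE API behaves.

```python
import json
import re


def find_portgroup(mob_html, network_uuid):
    """Return the 'vxw...<uuid>' Portgroup name found in MOB HTML, or None.

    Looks for text of the form '(vxw-...-<uuid>)', mirroring what the
    curl | grep | awk pipeline extracts.
    """
    pattern = r"\((vxw[^()]*" + re.escape(network_uuid) + r")\)"
    match = re.search(pattern, mob_html)
    return match.group(1) if match else None


def ensure_bde_network(session, base_url, name, portgroup):
    """Register the network with BDE only if it is not already known.

    `session` is an authenticated requests.Session (or anything with the same
    get/post interface). Returns True when a new network was created.
    """
    header = {"content-type": "application/json"}
    r = session.get(base_url + "/serengeti/api/network/" + name,
                    headers=header, verify=False)
    if r.status_code == 200:
        # Assumption: 200 means BDE already has this network.
        return False
    payload = {"name": name, "portGroup": portgroup, "isDhcp": "true"}
    r = session.post(base_url + "/serengeti/api/networks",
                     data=json.dumps(payload), headers=header, verify=False)
    r.raise_for_status()
    return True


# Made-up sample of the kind of text the MOB page contains.
sample = "<td>DistributedVirtualPortgroup (vxw-dvs-21-virtualwire-5-sid-5004-1f2e3d4c)</td>"
print(find_portgroup(sample, "1f2e3d4c"))
```

Fetching the MOB page with `requests` instead of shelling out to `curl` would also keep the credentials off the command line, though the hard-coded datacenter reference would still need to be addressed.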

When writing the plugin, I had hoped this was all that would be needed for the BDE nodes to tie into the NSX portgroup and pass traffic. Unfortunately, that was not the case, and I also had to figure out how to add the MAC address of every node, through Neutron, into NSX-v.

Creating Neutron ports for NSX-v

The `_create_nsx_ports` method is called after the BDE create cluster command is sent to the management server. The method is defined in the code on line 139:

    139 def _create_nsx_ports(self):

After getting the necessary OpenStack Neutron credential information, the method starts by gathering the node list from BDE in order to match the node name in BDE and the corresponding name in vCenter. This is necessary because BDE relies on vCenter to track the MAC addresses of the nodes created.

    # Get the node names for the cluster from BDE
    curr = self._open_connection()
    header = {'content-type': 'application/json'}
    api_call = '/serengeti/api/cluster/' + cluster_name
    url = prefix + bde_server + port + api_call
    r = curr.get(url, headers=header, verify=False)
    raw_json = json.loads(r.text)
    cluster_data = raw_json["nodeGroups"]

I then chose to iterate through the cluster_data variable and work through each one serially to gather the MAC addresses.

    # Open connect to the vSphere API
    si = SmartConnect(host=vcm_server, user=admin_user, pwd=admin_pass, port=443)
    search_index = si.content.searchIndex
    root_folder = si.content.rootFolder
    for ng in cluster_data:
        nodes = ng["instances"]
        for node in nodes:
            logger.info(_("VirtualElephant::VMware::BDE - Creating NSX port for %s") % node.get("name"))
            vm_name = node.get("name")
            vm_moId = node.get("moId")
            port_name = vm_name + "-port0"

            # moId is not in format we need to match
            (x,y,z) = vm_moId.split(":")
            vm_moId = "'vim." + y + ":" + z + "'"

            # Go through each DC one at a time, in case there are multiple in vCenter
            for dc in root_folder.childEntity:
                content = si.content
                objView = content.viewManager.CreateContainerView(dc, [vim.VirtualMachine], True)
                vm_list = objView.view
                objView.Destroy()

                for instance in vm_list:
                    # convert object to string so we can search
                    i = str(instance.summary.vm)
                    if vm_moId in i:
                        # Matched the VM in BDE and vCenter
                        logger.info(_("VirtualElephant::VMware::BDE - Match found for BDE node %s") % instance)

Once the match is found, we get the MAC address:

    for device in instance.config.hardware.device:
        if isinstance(device, vim.vm.device.VirtualEthernetCard):
            mac_address = str(device.macAddress)
            logger.info(_("VirtualElephant::VMware::BDE - Found MAC address %s") % mac_address)
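The node bookkeeping in the loops above (pulling names out of the BDE response and rewriting each moId so it can be compared against the string form of a vCenter object) can be pulled into small functions that are easy to test without a vCenter. This is only a sketch: `to_vim_moid` and `iter_nodes` are hypothetical helper names, and the sample moId `null:VirtualMachine:vm-101` is an assumed example of the three-part, colon-separated value that BDE returns.

```python
def to_vim_moid(bde_moid):
    """Rewrite a three-part BDE moId into the quoted 'vim.<Type>:<id>' form
    that the plugin matches against str(instance.summary.vm)."""
    _, obj_type, obj_id = bde_moid.split(":")
    return "'vim." + obj_type + ":" + obj_id + "'"


def iter_nodes(cluster_json):
    """Yield (vm_name, port_name, vim_moid) for every node in every node group."""
    for group in cluster_json["nodeGroups"]:
        for node in group["instances"]:
            name = node["name"]
            yield name, name + "-port0", to_vim_moid(node["moId"])


# Assumed shape of the BDE cluster response, trimmed to the fields used here.
sample_cluster = {
    "nodeGroups": [
        {"instances": [{"name": "hadoop01-master-0",
                        "moId": "null:VirtualMachine:vm-101"}]},
        {"instances": [{"name": "hadoop01-worker-0",
                        "moId": "null:VirtualMachine:vm-102"}]},
    ]
}

for vm_name, port_name, vim_moid in iter_nodes(sample_cluster):
    print(vm_name, port_name, vim_moid)
```

Keeping this part separate from the vSphere calls makes it possible to check the string handling on its own before any virtual machines are involved.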

With the MAC address in hand, the Neutron port can be created. This registers the node with NSX-v and allows the BDE nodes to access the network.

    # Create a new port through Neutron
    neutron = client.Client('2.0',
                            username=os_username,
                            password=os_password,
                            auth_url=os_auth_url,
                            tenant_name=os_tenant_name,
                            endpoint_url=os_url,
                            token=os_token)
    port_info = {
        "port": {
            "admin_state_up": True,
            "device_id": vm_name,
            "name": port_name,
            "mac_address": mac_address,
            "network_id": network_id
        }
    }
    logger.info(_("VirtualElephant::VMware::BDE - Neutron port string %s") % port_info)

    response = neutron.create_port(body=port_info)
    logger.info(_("VirtualElephant::VMware::BDE - NSX port creation response - %s") % response)
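One refinement worth considering is making the port creation safe to repeat, so that re-running the method does not try to create a second port for the same MAC address. The sketch below checks for an existing port first. `ensure_port` is a hypothetical helper, not part of the plugin, and it assumes `neutron` is a python-neutronclient v2.0 client, whose `list_ports` accepts filter keyword arguments and returns a dict with a `ports` list.

```python
def ensure_port(neutron, vm_name, mac_address, network_id):
    """Return the Neutron port for this MAC on this network, creating it if needed."""
    existing = neutron.list_ports(network_id=network_id,
                                  mac_address=mac_address)["ports"]
    if existing:
        # A port for this MAC is already registered; reuse it.
        return existing[0]
    port_info = {
        "port": {
            "admin_state_up": True,
            "device_id": vm_name,
            "name": vm_name + "-port0",
            "mac_address": mac_address,
            "network_id": network_id,
        }
    }
    return neutron.create_port(body=port_info)["port"]
```

Called once per node inside the MAC address loop, this would take the place of the unconditional `create_port` call.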

Conclusion

There remain some timing issues with creating the Neutron ports and getting the BDE nodes to obtain a DHCP IP address. Whether the Neutron ports enable the network traffic in time depends on how quickly the virtual machines are reconfigured and begin loading the OS. If the OS is already at the point where it is trying to get a DHCP address, then the virtual machine has to be reset/rebooted to get the address. The issue here is: how do you know which stage of the BDE deployment process the VM is in? This is the issue I continue to work through, and I have not found a resolution yet.
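One direction I could imagine, offered only as a sketch and not as a fix, is to stop guessing at the deployment stage and instead poll each node for an IP address after its Neutron port exists, resetting the VM only if nothing shows up within a deadline. In the code below, `get_ip` and `reset_vm` are hypothetical callables that the plugin would have to supply (for example by reading the guest IP from vCenter and issuing a reset task), and the timeout values are arbitrary.

```python
import time


def wait_for_ip(get_ip, reset_vm, timeout=300, interval=10,
                sleep=time.sleep, clock=time.monotonic):
    """Poll get_ip() until it returns an address or the timeout expires.

    Returns the IP address on success. If no address appears before the
    deadline, calls reset_vm() once and returns None so the caller can
    decide whether to poll again.
    """
    deadline = clock() + timeout
    while clock() < deadline:
        ip = get_ip()
        if ip:
            return ip
        sleep(interval)
    reset_vm()
    return None
```

This does not answer the question of which stage the VM is in; it only limits the reset to nodes that actually failed to pick up an address.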

If you have ideas, please reach out and let me know. I am hoping to solve it through the resource plugin, but ultimately I believe a more elegant solution will involve BDE handling the NSX-v updates itself.